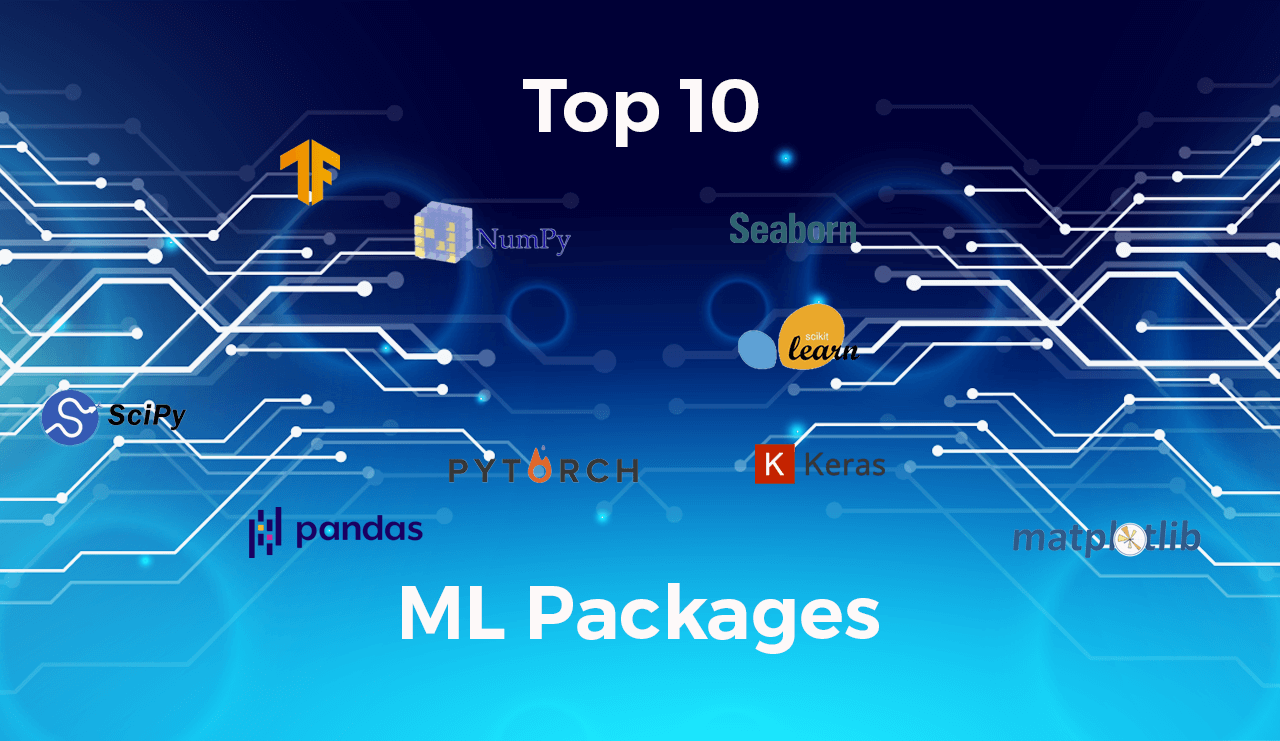

All Python Machine Learning and Deep Learning Libraries and Frameworks

By: Abolfazl Mohammadijoo | Date: Dec. 8, 2024

Various libraries and frameworks have emerged to facilitate machine learning and deep learning tasks in the rapidly evolving landscape of artificial intelligence. This article compares several prominent libraries, detailing their characteristics, history, real-world applications, advantages, disadvantages, and use cases.

TensorFlow

Type: Deep Learning

History: TensorFlow was developed by the Google Brain team and released as an open-source library in November 2015. It was created to support Google's machine learning and deep learning projects, enabling researchers and developers to build complex models efficiently.

Real-World Use: TensorFlow is widely used in various applications, including Google Search, Google Photos, and speech recognition systems. It is still actively maintained and widely adopted in both industry and academia.

Advantages:

- Flexibility: Supports both high-level APIs (like Keras) and low-level operations.
- Scalability: Can be deployed across multiple CPUs and GPUs.
- Community Support: Extensive documentation and a large community of users.

Disadvantages:

- Steep Learning Curve: The complexity of its architecture can be daunting for beginners.
- Verbose Syntax: Requires more lines of code compared to some other libraries.

Use Cases: Ideal for large-scale machine learning models, production systems, and research requiring custom implementations.
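The low-level side of TensorFlow's flexibility can be illustrated with `tf.GradientTape`, which records operations so their gradients can be computed and applied manually, without the Keras `fit` loop. This is a minimal sketch that assumes TensorFlow 2.x is installed. The toy data (y = 2x + 1), learning rate, and step count are illustrative choices, not recommended settings.

```python
import tensorflow as tf

# Toy data generated from an assumed linear relationship y = 2x + 1
x = tf.constant([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

# Trainable parameters, initialized at zero
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.05

for step in range(1000):
    with tf.GradientTape() as tape:
        # Forward pass: mean squared error of the linear model
        y_pred = w * x + b
        loss = tf.reduce_mean(tf.square(y_pred - y))
    # Reverse-mode differentiation of the operations recorded on the tape
    dw, db = tape.gradient(loss, [w, b])
    # Manual gradient-descent update
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print(f"w = {w.numpy():.3f}, b = {b.numpy():.3f}")
```

The same model could be written in a few lines with Keras; the tape-based version trades brevity for full control over each training step.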

Keras

Type: Deep Learning

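History: Keras was developed by François Chollet and released in March 2015. Initially designed as a high-level API for building neural networks, it originally ran on top of TensorFlow, Theano, or Microsoft CNTK. It was later integrated into TensorFlow as `tf.keras`, and Keras 3 (released in 2023) supports TensorFlow, JAX, and PyTorch as backends.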

Real-World Use: Keras is popular among researchers and developers for rapid prototyping. Companies like Netflix and Uber utilize Keras for various machine learning tasks. It remains actively used today.

Advantages:

- User-Friendly: Simple syntax makes it accessible to beginners.
- Rapid Prototyping: Facilitates quick experimentation with different neural network architectures.

Disadvantages:

- Limited Advanced Features: May not support some complex tasks as effectively as lower-level frameworks.
- Performance Overhead: Can be slower than hand-tuned, lower-level TensorFlow code because of its high-level abstractions.

Use Cases: Best suited for beginners or projects requiring fast model development without extensive customization.
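A minimal sketch of the Keras workflow (define, compile, fit, predict), assuming a recent Keras installation importable as `keras` (for example Keras 3, or the version installed alongside TensorFlow 2.x). The random dataset and layer sizes are hypothetical and only meant to show how little code a small network needs.

```python
import numpy as np
import keras

# Hypothetical toy dataset: 200 samples, 4 features, binary label
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4)).astype("float32")
y = (X[:, 0] + X[:, 1] > 0).astype("float32")

# A small feed-forward network, defined layer by layer
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# One call configures the optimizer, loss, and metrics
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(X, y, epochs=20, batch_size=32, verbose=0)

# Predicted probabilities for the first five samples
probs = model.predict(X[:5], verbose=0)
print(probs.shape)  # (5, 1)
```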

PyTorch

Type: Deep Learning

History: PyTorch was developed by Facebook's AI Research lab and released in October 2016. Its dynamic computational graphs made it especially popular for research.

Real-World Use: Widely used in academia for research in natural language processing (NLP) and computer vision. Companies like Tesla use PyTorch for autonomous driving technologies. It continues to see growing adoption.

Advantages:

- Dynamic Computation Graphs: The graph is built as the code runs, allowing more flexibility during model training.
- Ease of Debugging: Its Pythonic code structure makes debugging intuitive with standard Python tools.

Disadvantages:

- Less Mature Deployment Tools: Compared to TensorFlow, deployment can be more challenging.
- Performance Variability: May require additional optimization for production environments.

Use Cases: Preferred in research settings where flexibility is crucial or projects requiring rapid iteration on models.
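Dynamic ("define-by-run") graphs mean that ordinary Python control flow decides which operations are recorded. In this minimal sketch, a loop whose iteration count depends on the tensor's value still backpropagates correctly, because autograd only sees the operations that actually ran. The helper `double_until` is hypothetical, written just for this illustration.

```python
import torch


def double_until(x: torch.Tensor, limit: float) -> torch.Tensor:
    # The number of iterations depends on the runtime value of x,
    # so the computation graph is built on the fly as this loop executes.
    y = x
    while y < limit:
        y = y * 2
    return y


x = torch.tensor(3.0, requires_grad=True)
y = double_until(x, 100.0)
y.backward()  # backpropagate through exactly the operations that ran

print(y.item())       # 192.0 (3 doubled six times)
print(x.grad.item())  # 64.0  (dy/dx = 2**6)
```

A different starting value would take a different number of loop iterations and therefore produce a different graph, with no change to the code.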

Scikit-Learn

Type: Machine Learning

History: Initially developed by David Cournapeau as a Google Summer of Code project in 2007, Scikit-Learn has grown into a robust library for classical machine learning algorithms.

Real-World Use: Used by companies like Spotify and Airbnb for recommendation systems and data analysis. It remains actively maintained and is widely used in educational contexts.

Advantages:

- Comprehensive Documentation: Extensive resources make it beginner-friendly.
- Wide Range of Algorithms: Supports numerous algorithms for classification, regression, clustering, and more.

Disadvantages:

- Limited Deep Learning Support: Not designed for deep learning tasks; better suited for traditional machine learning.
- Performance Constraints on Large Datasets: Most estimators work in memory on a single machine, so very large datasets may be better served by specialized libraries.

Use Cases: Ideal for traditional machine learning tasks such as predictive modeling and data analysis on smaller datasets.
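Much of Scikit-Learn's ease of use comes from its uniform estimator API: every model exposes `fit`, `predict`, and `score`, and preprocessing steps can be chained with a pipeline. A minimal sketch on the built-in Iris dataset; the choice of `LogisticRegression` and the split parameters are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small built-in classification dataset
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Chain feature scaling and a classifier; the pipeline itself is an estimator
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.2f}")
```

Swapping in another classifier (for example a random forest) only changes the last step of the pipeline; the `fit`/`score` calls stay the same.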

Theano

Type: Deep Learning

History: Developed by the Montreal Institute for Learning Algorithms (MILA) in 2007, Theano was one of the first libraries designed to facilitate deep learning research but ceased major development in 2017.

Real-World Use: While Theano laid the groundwork for many subsequent frameworks (including Keras), it is largely deprecated and has limited ongoing use in new projects.

Advantages:

- Efficient Computation: Optimizes mathematical expressions involving multi-dimensional arrays.

Disadvantages:

- Lack of Updates: No longer actively maintained; users are encouraged to transition to other frameworks.

Use Cases: Historically used in academic research but now largely replaced by TensorFlow or PyTorch.

MXNet

Type: Deep Learning

History: Created in 2015 by the DMLC open-source community and later donated to the Apache Software Foundation, MXNet gained traction as a scalable deep learning framework favored by Amazon Web Services (AWS).

Real-World Use: Used extensively within AWS services; companies like Uber leverage MXNet for deep learning applications. However, the project was retired to the Apache Attic in 2023 and is no longer actively maintained, so it sees far less use than TensorFlow or PyTorch.

Advantages:

- Scalability: Efficient at running on multiple GPUs.

Disadvantages:

- Smaller Community: Less community support compared to larger frameworks.

Use Cases: Historically suited to large-scale deep learning applications requiring efficient resource management; new projects are better served by actively maintained frameworks.

Pandas

Type: Data Manipulation Library

History: Created by Wes McKinney in 2008 while working at AQR Capital Management, Pandas has become a staple library for data manipulation in Python.

Real-World Use: Used by data scientists across industries including finance and healthcare. Actively maintained with widespread adoption in data analysis tasks.

Advantages:

- Powerful Data Structures: Provides DataFrames, which simplify data manipulation tasks.

Disadvantages:

- Memory Intensive: Can struggle with very large datasets due to memory constraints.

Use Cases: Ideal for data cleaning, transformation, and exploratory data analysis before modeling.
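A minimal sketch of the clean, transform, and explore steps on a DataFrame; the sales records are hypothetical.

```python
import pandas as pd

# Hypothetical raw sales records with one missing value
df = pd.DataFrame({
    "region": ["North", "South", "North", "East", "South"],
    "units": [10, 5, None, 8, 7],
    "price": [2.5, 3.0, 2.5, 4.0, 3.0],
})

# Cleaning: treat the missing unit count as zero
df["units"] = df["units"].fillna(0)

# Transformation: derive a revenue column from existing columns
df["revenue"] = df["units"] * df["price"]

# Exploration: total revenue per region, largest first
summary = df.groupby("region")["revenue"].sum().sort_values(ascending=False)
print(summary)
```

Each step is a whole-column operation rather than a Python loop over rows, which is what keeps typical DataFrame code short.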

NumPy

Type: Numerical Computing Library

History: Created by Travis Oliphant in 2005 as an evolution of the Numeric and Numarray libraries. NumPy serves as the foundation for many scientific computing libraries in Python.

Real-World Use: Widely used across scientific computing domains including finance, physics, and engineering. Continues to be actively developed and used extensively.

Advantages:

- Fast Array Computations: Optimized performance with multi-dimensional arrays.

Disadvantages:

- Limited Functionality on Its Own: Primarily serves as a numerical foundation; additional libraries are needed for advanced tasks.

Use Cases: Essential for numerical computation, and the backbone of other libraries such as Pandas and SciPy.
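NumPy's speed comes from vectorization (whole-array operations executed in compiled code) and broadcasting (combining arrays of different shapes without explicit loops). A minimal sketch comparing a vectorized sum with an equivalent Python loop, plus a broadcasting example; the arrays are arbitrary.

```python
import numpy as np

values = np.arange(1_000, dtype=np.float64)

# Vectorized: squaring and summing run in compiled code
squares_sum = np.sum(values ** 2)

# Equivalent pure-Python loop, much slower for large arrays
loop_sum = 0.0
for v in values.tolist():
    loop_sum += v * v

# Broadcasting: subtract each column's mean from a 3x2 matrix without a loop
matrix = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
centered = matrix - matrix.mean(axis=0)  # shape (3, 2) minus shape (2,)

print(squares_sum, loop_sum)  # 332833500.0 332833500.0
print(centered)
```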

SciPy

Type: Scientific Computing Library

History: SciPy was created around 2001 by Travis Oliphant, Eric Jones, Pearu Peterson, and others. It originally built on Numeric, NumPy's predecessor, and now builds on NumPy to provide functionality beyond NumPy's capabilities.

Real-World Use: Utilized extensively in scientific research across various fields including physics and engineering. Actively maintained with ongoing contributions from the community.

Advantages:

- Extensive Functionality: Offers modules for optimization, integration, interpolation, eigenvalue problems, and more.

Disadvantages:

- Complexity for Beginners: Can be overwhelming due to its breadth of functions.

Use Cases: Ideal for scientific computing tasks that require advanced mathematical functions beyond the basic array operations provided by NumPy.
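A minimal sketch of two of the modules mentioned above, `scipy.optimize` and `scipy.integrate`, applied to textbook problems with known answers: the Rosenbrock function has its minimum at (1, 1), and the integral of sin(x) from 0 to π is 2.

```python
import numpy as np
from scipy import integrate, optimize

# Optimization: minimize the Rosenbrock function using its analytic gradient
result = optimize.minimize(
    optimize.rosen,
    x0=np.array([-1.2, 1.0]),
    jac=optimize.rosen_der,
    method="BFGS",
)
print(result.x)  # close to [1. 1.]

# Integration: adaptive quadrature returns the estimate and an error bound
area, abs_error = integrate.quad(np.sin, 0.0, np.pi)
print(area)  # close to 2.0
```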

XGBoost

Type: Machine Learning (Gradient Boosting)

History: Developed by Tianqi Chen in 2014 as an efficient implementation of gradient-boosted decision trees, designed specifically for speed and performance improvements over existing implementations.

Real-World Use: Widely adopted in Kaggle competitions and used by companies like Airbnb and Alibaba. Continues to be actively developed with strong community support.

Advantages:

- High Performance: Known for its speed and accuracy; often outperforms other algorithms on structured data.

Disadvantages:

- Complexity of Tuning Parameters: Requires careful tuning of hyperparameters to achieve optimal performance.

Use Cases: Best suited for structured/tabular data classification or regression tasks where high performance is critical.
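To make "gradient boosting" concrete, here is a from-scratch sketch in NumPy rather than XGBoost's own API: each round fits a depth-1 tree (a stump) to the current residuals, which are the negative gradients of squared error, and adds a shrunken copy of it to the model. XGBoost builds on this same loop with second-order gradients, regularization, and heavily optimized, parallel tree construction. The helpers `fit_stump`, `gradient_boost`, and `predict` are hypothetical, and `n_rounds` and `learning_rate` are examples of the hyperparameters that need tuning.

```python
import numpy as np


def fit_stump(x, residuals):
    """Return (threshold, left_value, right_value) for the depth-1 tree
    that minimizes squared error when predicting the residuals."""
    best = None
    for threshold in np.unique(x)[:-1]:  # the last value would leave the right side empty
        left = x <= threshold
        left_value, right_value = residuals[left].mean(), residuals[~left].mean()
        error = ((residuals[left] - left_value) ** 2).sum() + (
            (residuals[~left] - right_value) ** 2
        ).sum()
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    return best[1:]


def gradient_boost(x, y, n_rounds=200, learning_rate=0.1):
    """Plain gradient boosting with squared-error loss and stumps."""
    base = y.mean()
    prediction = np.full(y.shape, base)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply the residual
        residuals = y - prediction
        threshold, left_value, right_value = fit_stump(x, residuals)
        # Shrink each new tree by the learning rate before adding it
        prediction += learning_rate * np.where(x <= threshold, left_value, right_value)
        stumps.append((threshold, left_value, right_value))
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    output = np.full(x.shape, base)
    for threshold, left_value, right_value in stumps:
        output += learning_rate * np.where(x <= threshold, left_value, right_value)
    return output


# Hypothetical 1-D regression problem
x = np.linspace(0.0, 6.0, 60)
y = np.sin(x)

model = gradient_boost(x, y)
mse = np.mean((predict(model, x) - y) ** 2)
print(f"Training MSE: {mse:.4f} (variance of y: {np.var(y):.4f})")
```

A smaller `learning_rate` usually needs more rounds but tends to generalize better, which is one reason these libraries require careful tuning.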

Dask-ML

Type: Machine Learning (Parallel Computing)

History: Dask was started around 2015 at Continuum Analytics (now Anaconda) as an open-source project for parallel computing with larger-than-memory datasets using familiar Python interfaces like NumPy or Pandas. Dask-ML builds on it to scale machine learning workflows.

Real-World Use: Used by organizations such as Anaconda Inc. that need scalable machine learning on large datasets. It remains actively developed within the Dask ecosystem.

Advantages:

- Scalability Across Clusters: Can handle larger-than-memory computations efficiently using parallel processing.

Disadvantages:

- Learning Curve with Distributed Systems: Requires understanding of distributed computing concepts, which may be complex for beginners.

Use Cases: Ideal when working with large datasets that cannot fit into memory or when distributed computing resources are available.
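Dask-ML's `dask_ml.wrappers.Incremental` wrapper trains on a Dask array block by block by calling an estimator's `partial_fit`. The sketch below shows that same block-by-block pattern with plain scikit-learn and a hypothetical chunk generator, without Dask itself, so only one chunk is in memory at a time; Dask adds lazy, parallel, and distributed scheduling on top. The `iter_chunks` helper and the synthetic data are assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier


def iter_chunks(n_chunks, chunk_size, seed=0):
    """Hypothetical stand-in for reading a large dataset block by block
    (for example from files on disk); yields one (X, y) chunk at a time."""
    rng = np.random.default_rng(seed)
    for _ in range(n_chunks):
        X = rng.normal(size=(chunk_size, 2))
        y = (X[:, 0] + X[:, 1] > 0).astype(int)
        yield X, y


clf = SGDClassifier(random_state=0)
classes = np.array([0, 1])  # partial_fit needs the full label set up front

# Incremental training: each chunk updates the model, then can be discarded
for X_chunk, y_chunk in iter_chunks(n_chunks=20, chunk_size=1_000):
    clf.partial_fit(X_chunk, y_chunk, classes=classes)

# Evaluate on a fresh held-out chunk
X_test, y_test = next(iter_chunks(1, 2_000, seed=123))
accuracy = clf.score(X_test, y_test)
print(f"Held-out accuracy: {accuracy:.3f}")
```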

Matplotlib & Seaborn

Type: Data Visualization Libraries

History: Matplotlib was created by John Hunter in 2003. Seaborn, built on top of Matplotlib by Michael Waskom around 2013, provides a higher-level interface for drawing attractive statistical graphics.

Real-World Use: Widely used across industries including academia and business analytics. Both libraries are actively maintained with strong community support.

Advantages:

- Comprehensive Features (Matplotlib):
  - Highly customizable plots
  - Wide range of plotting options
  - Integration with other libraries
- Attractive Statistical Graphics (Seaborn): Easy-to-use interface that simplifies complex visualizations

Disadvantages:

- Steeper Learning Curve (Matplotlib): Customization can become complex due to numerous parameters
- Limited Interactivity (Both): Basic plots may lack interactivity without additional tools

Use Cases: Ideal for exploratory data analysis where visual representation is crucial to understanding trends or patterns within data.
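A minimal Matplotlib sketch with two panels, assuming the non-interactive `Agg` backend so it runs without a display; the normally distributed data is randomly generated for illustration. With Seaborn installed, `seaborn.histplot(samples, ax=ax_hist)` would draw a comparable histogram with statistical styling in a single call.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: renders without a display
import matplotlib.pyplot as plt
import numpy as np

# Hypothetical data: 500 samples from a standard normal distribution
rng = np.random.default_rng(0)
samples = rng.normal(loc=0.0, scale=1.0, size=500)

fig, (ax_hist, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# Left panel: distribution of the samples
ax_hist.hist(samples, bins=30, color="steelblue", edgecolor="white")
ax_hist.set(title="Sample distribution", xlabel="value", ylabel="count")

# Right panel: running mean, which should settle near the true mean of 0
running_mean = np.cumsum(samples) / np.arange(1, samples.size + 1)
ax_line.plot(running_mean)
ax_line.axhline(0.0, color="gray", linestyle="--")
ax_line.set(title="Running mean", xlabel="number of samples", ylabel="mean")

# Render to an in-memory PNG instead of opening a window
fig.tight_layout()
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)
png_bytes = buffer.getvalue()
```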

LightGBM

Type: Machine Learning (Gradient Boosting)

History: Developed by Microsoft Research and open-sourced in 2016, LightGBM is a gradient boosting framework that uses tree-based learning algorithms designed specifically for efficiency on large datasets.

Real-World Use: Used at Microsoft itself, in financial modeling, and in numerous Kaggle competitions. Actively maintained with growing adoption among data scientists.

Advantages:

- Speed & Efficiency: Often trains faster than XGBoost, thanks to histogram-based split finding and leaf-wise tree growth.

Disadvantages:

- Complexity in Hyperparameter Tuning: Requires careful tuning, similar to XGBoost.

Use Cases: Best suited when working with large datasets requiring fast training times while maintaining high accuracy.
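The idea behind histogram-based split finding can be sketched in NumPy: bucket a continuous feature into a small number of bins once, accumulate gradient sums per bin in a single pass, then evaluate only the bin boundaries as split candidates. This is a simplified illustration under a squared-error loss, not LightGBM's implementation, which adds leaf-wise tree growth and further optimizations; `best_histogram_split` and its `n_bins` default are hypothetical.

```python
import numpy as np


def best_histogram_split(feature, gradients, n_bins=16):
    """Return a threshold such that rows with feature < threshold go left.

    Split candidates are bin boundaries rather than every unique value,
    so after one O(n) pass the search costs only O(n_bins).
    """
    # Pre-binning into quantile bins (done once per feature before training)
    edges = np.quantile(feature, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    bins = np.searchsorted(edges, feature, side="right")  # bin ids 0..n_bins-1

    # A single pass accumulates per-bin gradient sums and counts
    grad_hist = np.bincount(bins, weights=gradients, minlength=n_bins)
    count_hist = np.bincount(bins, minlength=n_bins)

    total_grad, total_count = grad_hist.sum(), count_hist.sum()
    best_gain, best_bin = -np.inf, None
    left_grad = left_count = 0.0
    for b in range(n_bins - 1):
        left_grad += grad_hist[b]
        left_count += count_hist[b]
        right_grad, right_count = total_grad - left_grad, total_count - left_count
        if left_count == 0 or right_count == 0:
            continue
        # Squared-error gain G_L^2/n_L + G_R^2/n_R (constant G^2/n term omitted)
        gain = left_grad**2 / left_count + right_grad**2 / right_count
        if gain > best_gain:
            best_gain, best_bin = gain, b
    return None if best_bin is None else edges[best_bin]


# Hypothetical data whose target jumps at feature = 3
feature = np.linspace(0.0, 10.0, 1000)
target = np.where(feature < 3.0, 5.0, 0.0)
# Gradient of squared error at a constant prediction equal to the mean
gradients = target.mean() - target

threshold = best_histogram_split(feature, gradients)
print(threshold)  # a bin boundary near 3
```

The chosen threshold can only be a bin boundary, so precision is traded for speed; more bins narrow that gap at a higher cost.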

CatBoost

Type: Machine Learning (Gradient Boosting)

History: Developed by Yandex researchers around 2017, CatBoost is specifically designed to handle categorical features without the extensive preprocessing that other gradient boosting algorithms like XGBoost or LightGBM typically require.

Real-World Use: Widely adopted across various industries including finance and e-commerce; used effectively at Yandex itself and by several Kaggle competition winners. Actively maintained with continuous updates from the community.

Advantages:

- Native Categorical Support: Handles categorical features without extensive preprocessing, provides robust performance on diverse datasets, and is user-friendly compared to competitors.

Disadvantages:

- Hyperparameter Tuning: Like other gradient boosting frameworks, it may require parameter tuning.

Use Cases: Ideal when working with datasets containing many categorical variables where preprocessing efforts can be minimized.
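CatBoost's native categorical handling rests on ordered target statistics: each row's category is replaced by a smoothed average of the target over rows that precede it in a random permutation, so a row's own label never leaks into its encoding. A simplified single-permutation sketch; CatBoost itself uses several permutations and additional encodings, and the function name, `prior`, and `prior_weight` here are hypothetical.

```python
import numpy as np


def ordered_target_encoding(categories, targets, prior=0.5, prior_weight=1.0, seed=0):
    """Encode each row using only targets of rows earlier in a random order."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(categories))
    sums, counts = {}, {}
    encoded = np.empty(len(categories), dtype=float)
    for idx in order:
        cat = categories[idx]
        s, c = sums.get(cat, 0.0), counts.get(cat, 0)
        # Smoothed mean of the targets seen so far for this category;
        # the first occurrence of a category gets exactly the prior
        encoded[idx] = (s + prior_weight * prior) / (c + prior_weight)
        # Only now add this row's own target to the running statistics
        sums[cat] = s + targets[idx]
        counts[cat] = c + 1
    return encoded


# Hypothetical binary-target data with one categorical column
categories = ["a", "b", "a", "a", "c"]
targets = [1, 0, 1, 0, 1]
encoded = ordered_target_encoding(categories, targets)
print(encoded)
```

Categories seen only once ("b" and "c") fall back to the prior, which is how this scheme avoids overfitting rare categories to their own labels.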

Fastai

Type: Deep Learning

History: Developed by Jeremy Howard and Rachel Thomas around 2018 as a library built on top of PyTorch, aimed at making deep learning more accessible through higher-level abstractions while still retaining flexibility when needed.

Real-World Use: Widely used in education through fast.ai's online courses, as well as in practical applications across industries that benefit from its ease of use. It remains actively developed alongside PyTorch.

Advantages:

- High-Level API: Its user-friendly API, built on PyTorch, allows rapid prototyping while providing powerful tools under the hood.

Disadvantages:

- Heavy Abstraction: May hide too much complexity, leaving users unfamiliar with the underlying mechanics.

Use Cases: Ideal for quick experimentation and for education, where grasping concepts quickly is essential.

The choice among these libraries depends on the specific requirements of a project. Keras and Fastai offer the easiest entry point for beginners exploring deep learning, while Scikit-Learn serves traditional machine learning tasks well with less complexity. More advanced frameworks such as TensorFlow and PyTorch suit larger-scale production scenarios that need robust performance across domains such as NLP and computer vision. For structured/tabular datasets, gradient boosting libraries (XGBoost, LightGBM, and CatBoost) are strong options, and the best fit among them depends on the characteristics of the dataset, such as its size or the number of categorical variables.
